If you have been browsing short videos lately, you may have run into a familiar annoyance: AI-generated videos that stop abruptly after just 5 seconds. This "short video curse", which has frustrated developers worldwide, has been broken by a group of Tsinghua researchers with just one line of code.

The Breakthrough Path of Chinese AI

Over the past three years, AI video generation has advanced rapidly. From Meta's Make-A-Video onward, American companies largely held the technological lead, while domestic developers could only make tweaks on top of overseas frameworks and were even labeled "copiers". It was not until last year that the Tsinghua team, together with Shengshu Technology, launched the domestic large video model Vidu, which generated 16-second high-definition videos for the first time and broke that technological monopoly.

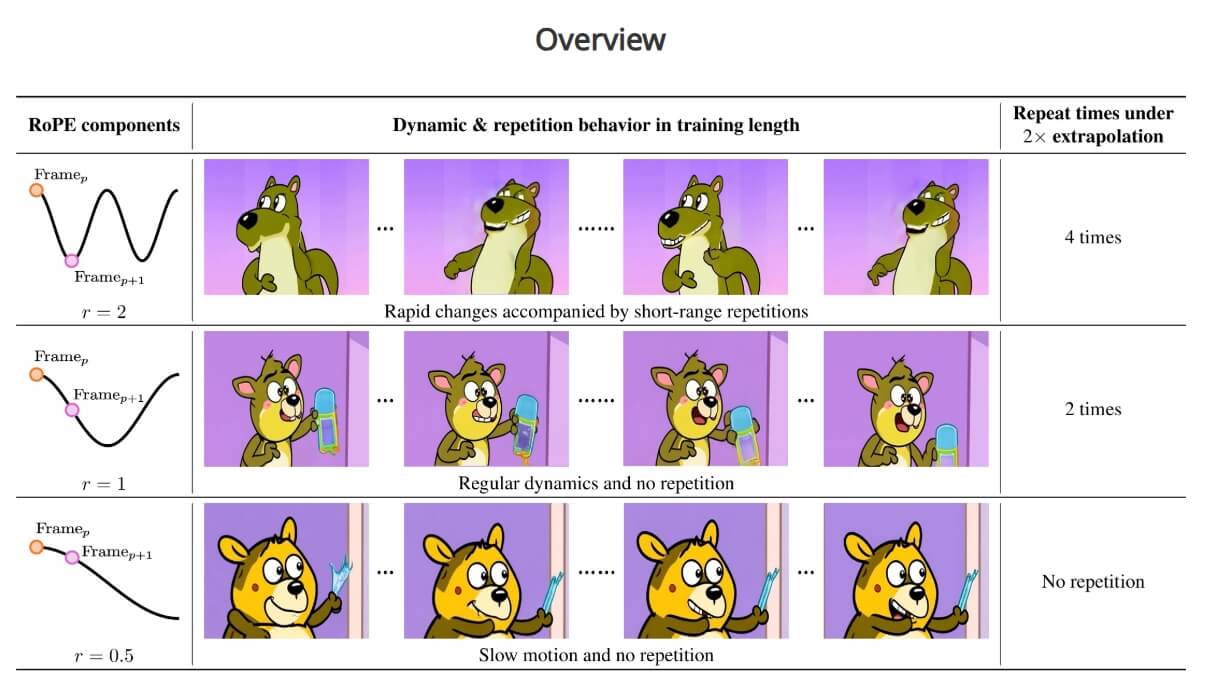

But users always want more. Five seconds is too short, and ten seconds is just right. This simple wish became the starting point for the research. The team led by Professor Zhu Jun at Tsinghua University found that existing models are limited by the periodicity of their positional encoding, so the longer the generated video, the more prone it is to repeating itself. Approaching the problem from the opposite direction, they adjusted the frequency parameters of the RoPE encoding so that the model can "remember" temporal information over a longer span.

The "Magic" of One Line of Code: The Birth of RIFLEx

In March of this year, RIFLEx, a solution that requires only one line of code, made a striking debut. It works like a "temporal telescope" fitted onto video generation models: without any retraining, it can double the generation length of mainstream models such as CogVideoX and HunyuanVideo to 10 seconds. It also supports spatial expansion of the frame. For example, it can extend a 9:16 vertical video into a widescreen one and "imagine" the scene beyond the original frame.
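To make the idea of adjusting a RoPE frequency concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the authors' implementation: the function names, the rule used to pick which component to change, and the 0.9 safety margin are all hypothetical choices made for this example. It builds the standard RoPE frequencies, picks the component whose period is closest to the training length, and lowers that one frequency so it completes less than one full cycle over the longer target length.

```python
import math


def rope_frequencies(dim: int, theta: float = 10000.0) -> list[float]:
    """Standard RoPE angular frequencies: theta ** (-2i / dim) for i in 0 .. dim/2 - 1."""
    return [theta ** (-2.0 * i / dim) for i in range(dim // 2)]


def closest_period_index(freqs: list[float], train_len: int) -> int:
    """Index of the component whose period (2*pi / frequency) is closest to train_len.

    Using this as the component to adjust is an assumption made for illustration.
    """
    return min(range(len(freqs)), key=lambda i: abs(2.0 * math.pi / freqs[i] - train_len))


def lower_frequency(freqs: list[float], k: int, target_len: int, margin: float = 0.9) -> list[float]:
    """Return a copy of freqs where component k is capped so that it stays within
    a single period across target_len positions. The margin is a hypothetical choice."""
    adjusted = list(freqs)
    # The "one line" of the sketch: cap the chosen frequency, never raise it.
    adjusted[k] = min(adjusted[k], margin * 2.0 * math.pi / target_len)
    return adjusted
```

With, say, 16 temporal dimensions, a training length of 64 positions, and a target of 128, `closest_period_index` selects one component and `lower_frequency` stretches its period beyond 128 positions while leaving every other component untouched. That is the sense in which a single changed value can delay the point at which the encoding starts to repeat.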

The technique caused a sensation overseas. The well-known blogger AK commented, "This is a revolutionary breakthrough in the field of video diffusion models." Community developers spontaneously integrated RIFLEx into tools such as Stable Diffusion. A French developer wrote excitedly on a forum, "The Chinese team has taught us how to gracefully break through technical boundaries."

The Significance of RIFLEx

The birth of RIFLEx reflects the deeper logic of China's AI development. Unlike enterprises that work behind closed doors, the Tsinghua team chose to open source the code immediately. This openness is all the more valuable in light of the Stanford team's plagiarism incident: last year, a team at an American university was found to have misappropriated an open source model architecture from the Tsinghua ecosystem, and the episode ended with an apology.

"Open source is not charity, but the only way to build an ecosystem." Dr. Zhao Min, the core developer of the project, said in an interview. It is this sharing spirit that has enabled the Vidu model to serve tens of millions of users in just half a year and also allowed RIFLEx to be cited by developers from more than 20 countries in just two weeks.

The Future Is Here: When Technology Shines into Reality

Looking back from the spring of 2025, the trajectory of Chinese AI is clear: from the helplessness of being embargoed to a thriving open source ecosystem, and from doubts about "copying and improving" to the breakthrough of "reverse output". RIFLEx's single line of code not only rewrites the rules of video generation, but also signals that China's voice in basic AI research is being reshaped.

Perhaps as Professor Zhu Jun said in the open source statement: "True innovation always grows in an open soil." When more and more Chinese technologies go to the world like RIFLEx, what we expect is not only technological breakthroughs, but also a future defined by innovation.

RIFLEx Quick Experience Guide

If you want to try RIFLEx firsthand, you can follow these steps:

Obtain the code

Visit the open source repository on GitHub (https://github.com/thu-ml/RIFLEx) and set up the environment by following the instructions in the README. The code is compatible with the PyTorch and Diffusers frameworks and has modest hardware requirements: graphics cards at the level of the RTX 3060 and above can run it smoothly.

Conclusion

The emergence of RIFLEx marks a major breakthrough for Chinese AI technology in the field of video generation. With a simple and elegant one-line solution, the Tsinghua team not only broke the length limit of AI-generated video, but also gave developers around the world an open platform for innovation. As the technology continues to develop and find applications, we can look forward to more remarkable possibilities for AI video creation.
